What You Need To Know About Non-Consensual Sexual Deepfakes

This infographic focuses on the non-consensual use of adults' images and videos in the production and distribution of sexual deepfakes.

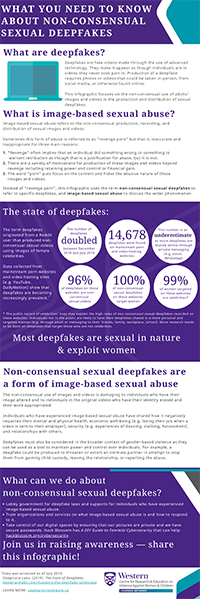

What are deepfakes?

Deepfakes are fake videos made using advanced technology such as artificial intelligence. They make it appear as though individuals took part in videos they never appeared in. Producing a deepfake requires photos or videos of a person, which could be taken in person, copied from social media, or otherwise found online. This infographic focuses on the non-consensual use of adults' images and videos in the production and distribution of sexual deepfakes.

What is image-based sexual abuse?

Image-based sexual abuse refers to the non-consensual production, recording, and distribution of sexual images and videos. This form of abuse is sometimes called "revenge porn," but that term is inaccurate and inappropriate for three main reasons:

1. "Revenge" implies that the targeted person did something wrong to warrant retribution, as though that could justify the abuse. It cannot.

2. Motivations for producing these images and videos go beyond revenge and include maintaining power and control, or financial gain.

3. The word "porn" puts focus on the content and hides the abusive nature of these images and videos.

Instead of "revenge porn," this infographic uses the term "non-consensual sexual deepfakes" to refer to specific deepfakes, and "image-based sexual abuse" to discuss the wider phenomenon.

The state of deepfakes:

The term "deepfakes" originated with a Reddit user who produced non-consensual sexual videos using images of female celebrities. Data collected from mainstream porn websites and video hosting sites (e.g. YouTube, Dailymotion) show that deepfakes are becoming increasingly prevalent.¹

- The number of deepfakes nearly doubled between December 2018 and July 2019

- 14,678 deepfakes were found on mainstream porn and video hosting websites

- This number is an underestimate, since additional deepfakes are shared through private messaging (e.g. email, WhatsApp)

- 96% of deepfakes on these websites are non-consensual sexual videos

- 100% of non-consensual sexual deepfakes on these websites target women

- 99% of women targeted on these websites are celebrities*

¹ Data was accessed as of July 2019. Deeptrace Labs. (2019). The State of Deepfakes.

* The public nature of celebrities' lives may explain the high rates of non-consensual sexual deepfakes recorded on these websites. Deepfakes of individuals who are not in the public eye are likely to be shared in a more personal and targeted manner (e.g. through email or messages sent to their friends, family, workplace, or school). More research is needed on deepfakes that target people who are not celebrities.

Most deepfakes are sexual in nature & exploit women.

Non-consensual sexual deepfakes are a form of image-based sexual abuse

The non-consensual use of images and videos harms both the individuals whose image is altered and the individuals in the original videos, whose identities are erased and whose work is appropriated. Individuals who have experienced image-based sexual abuse have described how it harmed their mental and physical health, economic well-being (e.g. losing their job when a video is sent to their employer), security (e.g. doxxing, stalking, harassment), and relationships with others.

Deepfakes must also be considered in the broader context of gender-based violence, as they can be used as a tool to maintain power and control over individuals. For example, a deepfake could be produced to threaten or extort an intimate partner in an attempt to stop them from seeking child custody, leaving the relationship, or reporting the abuse.

What can we do about non-consensual sexual deepfakes?

- Lobby government for deepfake laws and supports for individuals who have experienced image-based sexual abuse.

- Train organizations and services on what image-based sexual abuse is and how to respond to it.

- Take control of our digital spaces by keeping our pictures private and using secure passwords. Hack Blossom's A DIY Guide to Feminist Cybersecurity can help: www.hackblossom.org/cybersecurity